🌐 Your segmentation model's confidence might be lying to you

PLUS: Google's new Earth AI framework and IBM's green space simulations

Hey guys, here’s this week’s edition of the Spatial Edge — a safe space for sinusoidal fetishists. In any case, the aim is to make you a better geospatial data scientist in less than five minutes a week.

In today’s newsletter:

Segmentation Uncertainty: Deep learning models struggle with pixel-level confidence.

Earth AI: Google combines foundation models for geospatial analysis.

Urban Greening Simulation: IBM predicts park cooling effects before planting.

Burned Area Data: ESA provides monthly fire data at 25km resolution.

Building Density: TEMPO offers quarterly global building height estimates.

Research you should know about

1. How well can segmentation models know when they’re wrong?

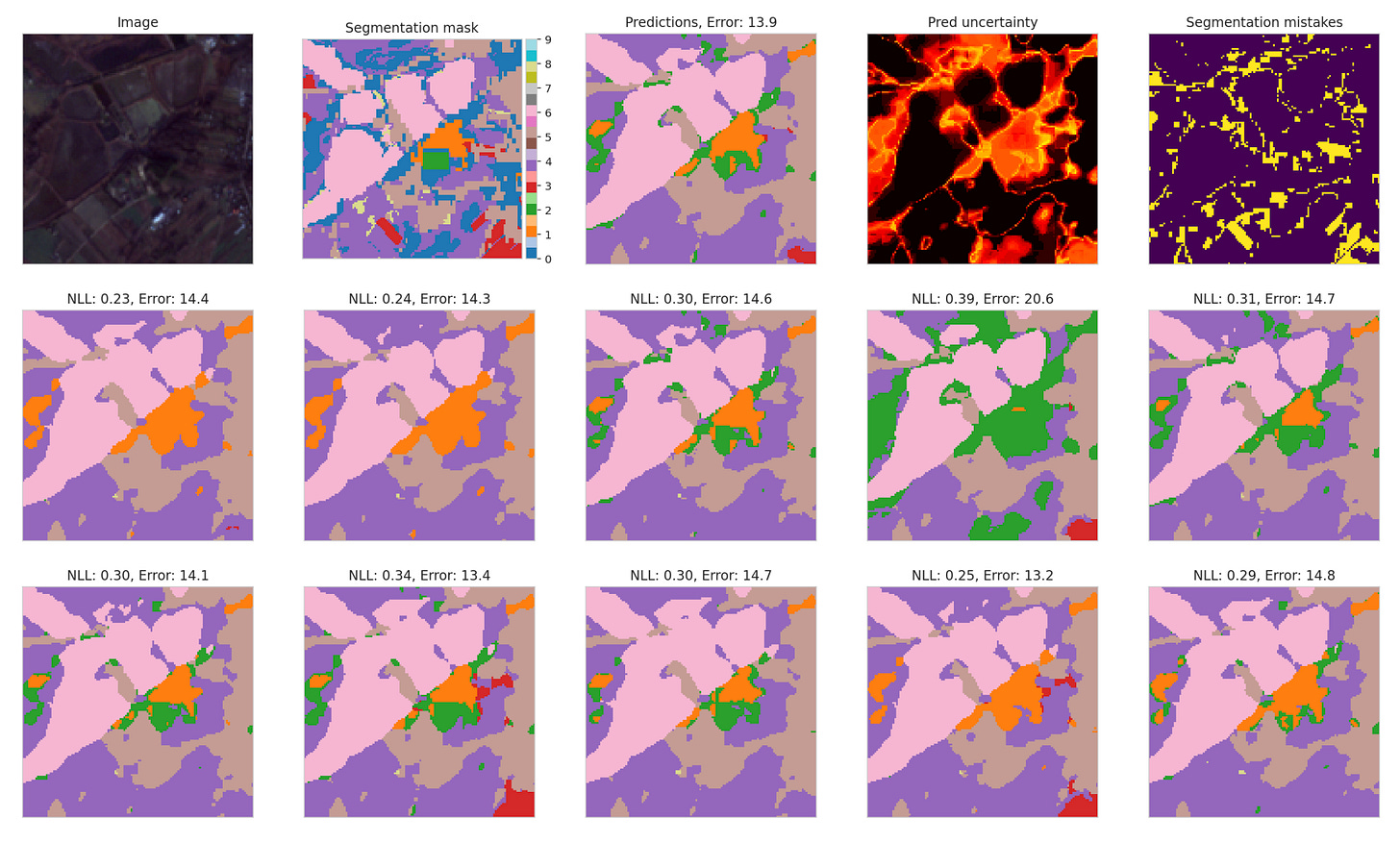

If you’ve worked with deep learning models for satellite image segmentation, you’ve probably wondered how much you can trust the model’s confidence scores. A new paper from Google DeepMind benchmarks different methods for estimating uncertainty in segmentation models trained on remote sensing data, and the findings are a bit of a mixed bag.

The authors tested a bunch of approaches (including simple probability-based metrics, ensemble models, and more sophisticated Stochastic Segmentation Networks) across two datasets: PASTIS (a French agricultural dataset with high-quality labels) and ForTy (a global forest types dataset with more variable label quality). The headline finding is that common uncertainty methods are pretty limited at identifying individual misclassified pixels at test time. However, they’re much better at flagging entire images that are likely to be poorly segmented, which could still be useful for quality control.

Perhaps the most surprising result is that the choice of model architecture matters more than the choice of uncertainty method. Vision Transformer-based models substantially outperformed convolutional models (like UNET3D) at identifying segmentation errors. Ensembles gave the best overall segmentation accuracy, but Stochastic Segmentation Networks offered a good compromise between performance and efficiency, requiring only about 1% more parameters than a standard model while delivering competitive results.

There’s also a bit of an issue when it comes to noisy inputs. Models can only reliably identify corrupted pixels when the test-time noise level is much higher than what they saw during training. If you train with noise at the same level you encounter in the wild, the model basically adapts to it and loses its ability to flag those regions as problematic. The authors recommend setting aside a dedicated “uncertainty test set” for proper evaluation, since performance varies quite a bit across datasets and tasks.
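To make the pixel-level versus image-level distinction a bit more concrete, here's a minimal sketch of one of the simple probability-based metrics: per-pixel entropy, averaged into a single score per image. This isn't the paper's code. The softmax values, the function names, and the 0.5 threshold are all made up for illustration.

```python
import math

def pixel_entropy(probs):
    """Shannon entropy (in nats) of one pixel's class probabilities."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def image_uncertainty(prob_map):
    """Mean pixel entropy over an image given as rows of per-pixel class probabilities."""
    entropies = [pixel_entropy(px) for row in prob_map for px in row]
    return sum(entropies) / len(entropies)

# Two toy 2x2 "images" with 3 classes per pixel (made-up softmax outputs).
confident = [[[0.98, 0.01, 0.01], [0.97, 0.02, 0.01]],
             [[0.01, 0.98, 0.01], [0.02, 0.01, 0.97]]]
unsure = [[[0.40, 0.35, 0.25], [0.34, 0.33, 0.33]],
          [[0.50, 0.30, 0.20], [0.45, 0.45, 0.10]]]

scores = {"confident": image_uncertainty(confident),
          "unsure": image_uncertainty(unsure)}

# Flag whole images for review; 0.5 is an arbitrary threshold for this toy data.
flagged = [name for name, score in scores.items() if score > 0.5]
print(scores, flagged)
```

In practice you'd tune that threshold on a held-out set, which is roughly what the dedicated “uncertainty test set” recommendation is getting at.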

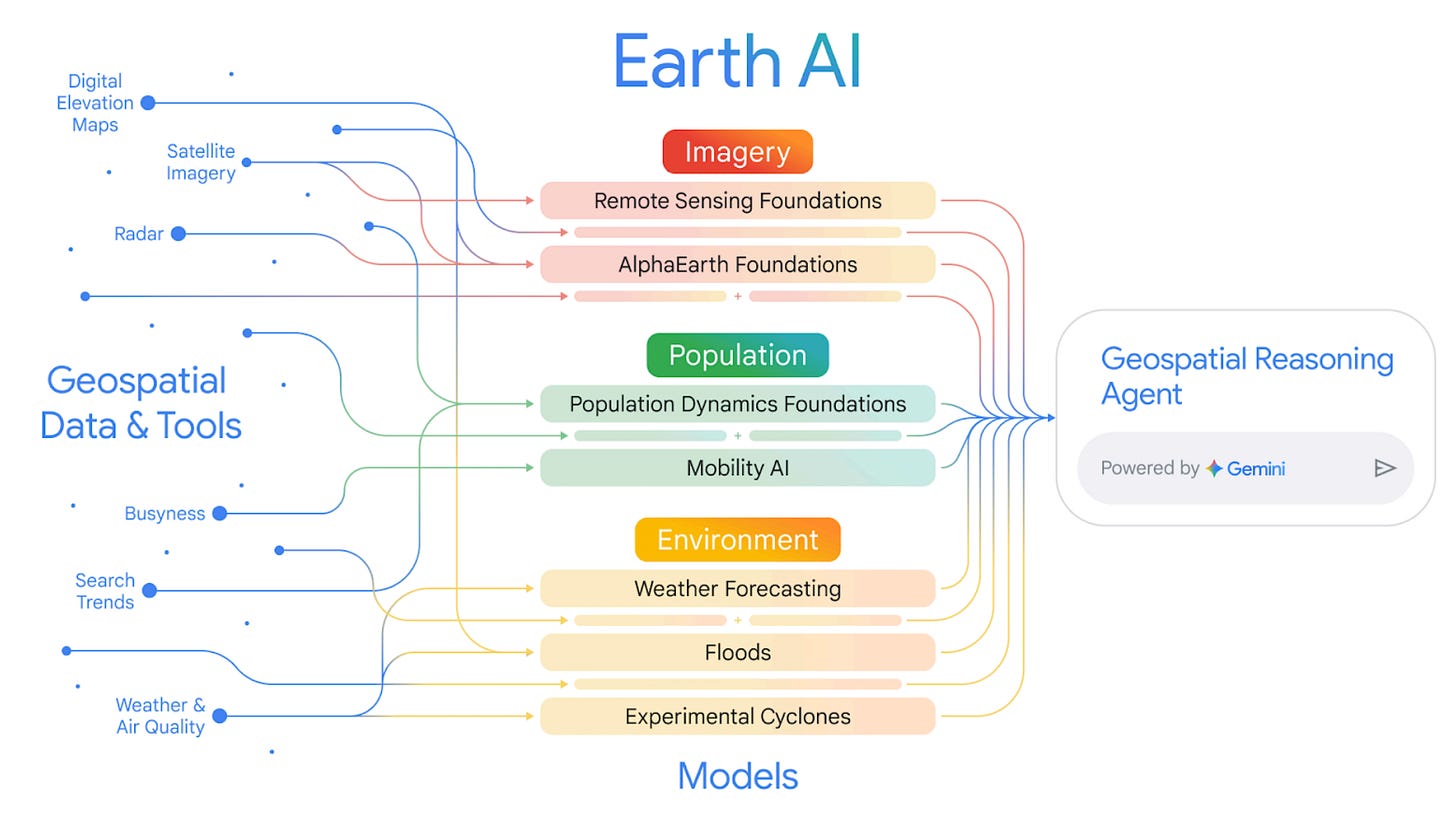

2. Google’s new ‘Earth AI’ framework

Google Research has released a working paper introducing ‘Earth AI’, a family of geospatial AI models paired with an agentic reasoning system. The main idea is to move beyond the traditional approach of using single, siloed models for specific tasks (like analysing satellite imagery or population data) and instead combine multiple foundation models that can ‘talk’ to each other. The framework is built on three pillars:

an Imagery model (for interpreting satellite and aerial photos),

a Population model (for understanding human behaviour and demographics), and

Environment models (for weather, floods, and cyclones).

A Gemini-powered ‘reasoning agent’ then orchestrates these models, allowing users to ask complex, multi-step questions in plain language rather than needing to manually download, join, and cross-reference datasets themselves.

The results are pretty compelling. Google’s Remote Sensing models achieved state-of-the-art performance on a bunch of benchmarks for tasks like zero-shot image classification and object detection. Their Population Dynamics model, which fuses maps data, search trends, and anonymised ‘busyness’ data, has been independently validated by external partners for applications ranging from predicting retail activity to forecasting dengue outbreaks in Brazil. Interestingly, the paper shows that combining these models produces better predictions than using any single one alone. For instance, using both Population and Imagery embeddings together led to an 11% improvement in predicting FEMA disaster risk scores compared to using either model on its own. In a retrospective analysis of Hurricane Ian, a model combining Google’s experimental cyclone forecasts with their Population embeddings predicted the number of damaged buildings to within 3% of the actual figure, three days before landfall.

The reasoning agent is where things get pretty interesting for real-world applications. Rather than just retrieving data, it can break down complex queries (like ‘identify Florida counties with populations over 20,000 that are forecast to experience hurricane-force winds’), delegate sub-tasks to the appropriate specialist model, and synthesise the results. On a benchmark of crisis-response scenarios, the agent significantly outperformed a baseline Gemini model without the custom geospatial tools. I think this is one further step towards the holy grail of being able to generate geospatial analyses without requiring a geospatial background.
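The decompose, delegate, synthesise pattern for that example query can be sketched in a few lines. To be clear, this is a toy illustration and not Google's agent or API: both "tools" are hypothetical stand-ins that return made-up numbers for placeholder counties. The only real-world fact baked in is that hurricane-force means sustained winds of 64 knots or more.

```python
HURRICANE_FORCE_KT = 64  # sustained winds of 64 knots or more

def population_tool():
    """Hypothetical stand-in for a population model lookup (made-up numbers)."""
    return {"County A": 15_000, "County B": 250_000, "County C": 48_000}

def wind_forecast_tool():
    """Hypothetical stand-in for a cyclone forecast lookup (peak wind in knots)."""
    return {"County A": 80, "County B": 70, "County C": 45}

def answer_query(min_population=20_000, min_wind_kt=HURRICANE_FORCE_KT):
    # Steps 1 and 2: delegate each sub-question to its specialist tool.
    population = population_tool()
    wind = wind_forecast_tool()
    # Step 3: synthesise by joining on county name and applying both filters.
    return sorted(
        county
        for county in population.keys() & wind.keys()
        if population[county] > min_population and wind[county] >= min_wind_kt
    )

print(answer_query())
```

The hard part in the real system is the bit this sketch skips: working out from plain language which tools to call and how to join their outputs.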

3. Simulating urban greening before anyone picks up a shovel

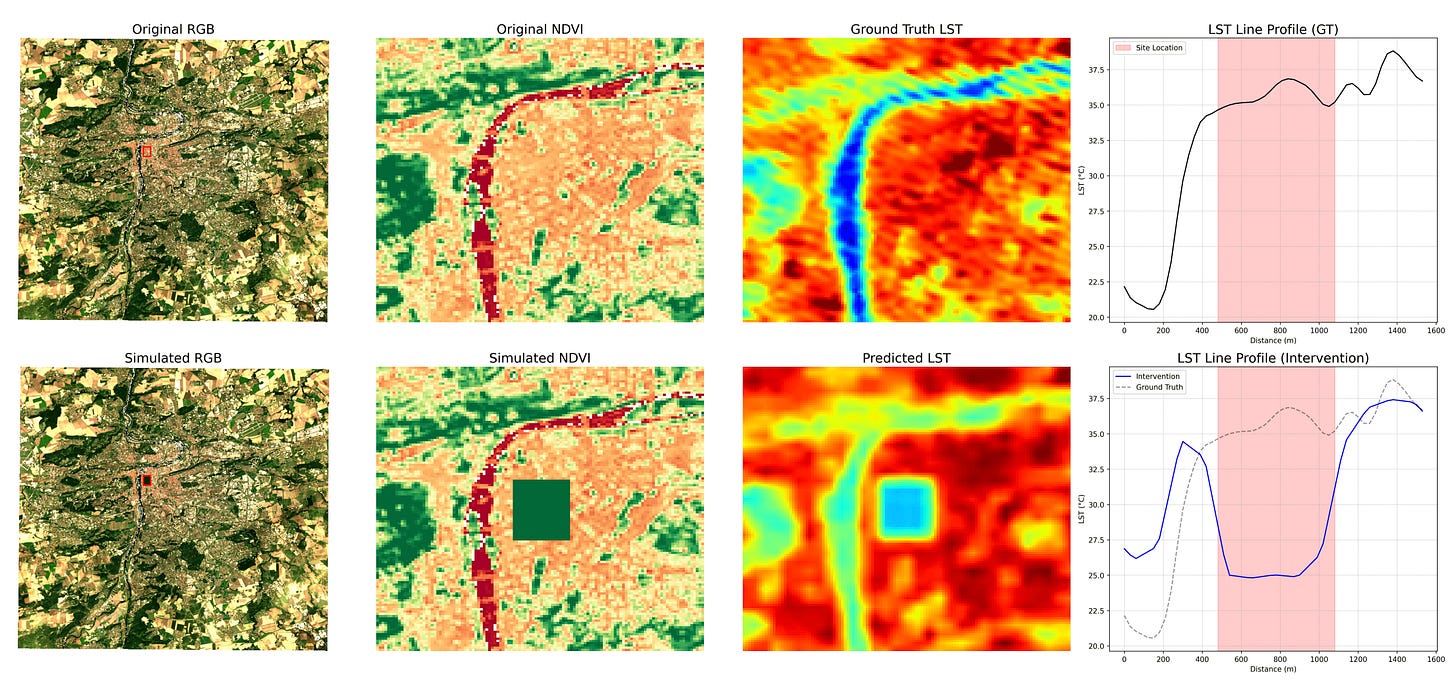

As cities get hotter, planners need good temperature data to figure out where to focus their cooling efforts. But there’s a bit of a problem. Traditional machine learning models need tons of local data to make accurate predictions, and that data often doesn’t exist for the cities that need it most. A new study from IBM Research tests whether geospatial foundation models (GFMs) can fill this gap. These models are trained on huge amounts of global satellite data, which means they can generalise to new cities with only minimal fine-tuning. The researchers used a GFM called Granite to predict land surface temperatures across 13 European cities that weren’t in the training set. They first established a ‘ground truth’ by measuring how parks actually cool down urban areas, both internally (within the park itself, temperatures dropped by up to 2.6°C) and through ‘spillover’ cooling into nearby built-up zones (up to 3.5°C cooler within 150 metres of a park edge).
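If you want a feel for what a ‘spillover’ number means, here's a rough sketch that compares temperatures within 150 metres of a park edge against those further out. The study's actual method isn't described here, so treat the near-versus-far comparison, the function name, and the sample values as my own assumptions.

```python
def spillover_cooling(samples, buffer_m=150):
    """Mean temperature beyond buffer_m minus mean temperature within buffer_m of the park edge."""
    near = [temp for dist, temp in samples if dist <= buffer_m]
    far = [temp for dist, temp in samples if dist > buffer_m]
    return sum(far) / len(far) - sum(near) / len(near)

# (distance from park edge in metres, land surface temperature in °C), all made up.
samples = [(30, 31.0), (90, 32.0), (150, 33.0), (300, 34.0), (600, 35.0)]

print(round(spillover_cooling(samples), 2))
```

A positive value means the built-up area near the park is cooler than the area further away.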

The model did a pretty decent job replicating these cooling patterns. An improved version trained on more diverse cities reduced the error in predicting spillover cooling from 0.30°C to 0.20°C. They also tested how well the model could predict temperatures it had never seen by training it only on cooler days and then asking it to predict the hottest 10% of observations. It managed to extrapolate about 3.6°C beyond its training range with reasonable accuracy, which gave the researchers enough confidence to project future urban heat island intensification under different climate scenarios out to 2100.
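The extrapolation test boils down to a particular kind of train/test split: hold out the hottest 10% of observations and train on the rest. Here's a minimal sketch of that split, assuming a plain list of temperatures. The function name and the data are hypothetical, and the researchers' exact procedure may differ.

```python
def split_hottest(temps, hot_fraction=0.10):
    """Return (train, test), where test holds the hottest hot_fraction of observations."""
    ordered = sorted(temps)
    n_test = max(1, round(len(ordered) * hot_fraction))
    return ordered[:-n_test], ordered[-n_test:]

# 20 made-up observations, from 20.0 °C to 39.0 °C.
temps = [float(t) for t in range(20, 40)]
train, test = split_hottest(temps)

# How far the held-out set reaches beyond anything seen in training.
extrapolation_gap = max(test) - max(train)
print(len(train), len(test), extrapolation_gap)
```

Unlike a random split, every test observation here sits outside the training range, so a good score says something about extrapolation and not just interpolation.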

The most interesting part, though, is the ‘inpainting’ simulation. The researchers digitally replaced a patch of built-up area in Prague with green space in the satellite imagery, adjusted the spectral indices accordingly, and ran the model to see what would happen to local temperatures. The model predicted both internal cooling within the hypothetical new park and spillover effects into the surrounding neighbourhood. It’s essentially a way to simulate urban greening interventions before anyone picks up a shovel, which could be pretty valuable for planners trying to decide where new parks would have the biggest impact.
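The ‘replace the pixels, then adjust the indices’ step might look something like the sketch below. I'm assuming NDVI as the spectral index and using made-up reflectance values for both the built-up tile and the vegetation. Which indices the researchers actually adjusted isn't stated here, and the temperature model itself is left out.

```python
def ndvi(red, nir):
    """Normalised Difference Vegetation Index from red and near-infrared reflectance."""
    return (nir - red) / (nir + red)

def inpaint_green(red, nir, rows, cols, veg_red=0.05, veg_nir=0.45):
    """Copy both bands and overwrite a rectangular patch with vegetation-like reflectance."""
    new_red = [row[:] for row in red]
    new_nir = [row[:] for row in nir]
    for r in rows:
        for c in cols:
            new_red[r][c], new_nir[r][c] = veg_red, veg_nir
    return new_red, new_nir

# A 3x3 built-up tile with made-up reflectance values.
red = [[0.25] * 3 for _ in range(3)]
nir = [[0.30] * 3 for _ in range(3)]

# Paint a hypothetical 2x2 park into the bottom-right corner.
new_red, new_nir = inpaint_green(red, nir, rows=range(1, 3), cols=range(1, 3))

before = ndvi(red[1][1], nir[1][1])
after = ndvi(new_red[1][1], new_nir[1][1])
print(round(before, 2), round(after, 2))
```

The modified bands and indices would then be fed to the temperature model in place of the originals.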

Geospatial Datasets

1. Monthly burned area dataset

This dataset provides monthly mean burned area at 0.25° (~25 km) resolution, averaged across 2019–2024 and derived from the ESA FireCCI Sentinel-3 SYN v1.1 product.
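A quick note on that ‘~25 km’: the size of a 0.25° cell on the ground depends on latitude. Here's a back-of-the-envelope sketch, assuming a spherical Earth and roughly 111.32 km per degree at the equator (the function name is just illustrative).

```python
import math

KM_PER_DEGREE = 111.32  # approximate length of one degree at the equator

def cell_width_km(resolution_deg, latitude_deg):
    """Approximate east-west width of a grid cell at a given latitude."""
    return resolution_deg * KM_PER_DEGREE * math.cos(math.radians(latitude_deg))

for lat in (0, 45, 60):
    print(lat, round(cell_width_km(0.25, lat), 1))
```

So cells are closer to 28 km wide at the equator and get narrower towards the poles, which is worth remembering if you're comparing burned area per cell across latitudes.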

2. Enhanced vegetation index composites

This dataset offers bimonthly Enhanced Vegetation Index (EVI) composites for 2020 at 250 m resolution, derived from MOD13Q1 and neatly organised into continental subsets. You can check out more on the data and code here.

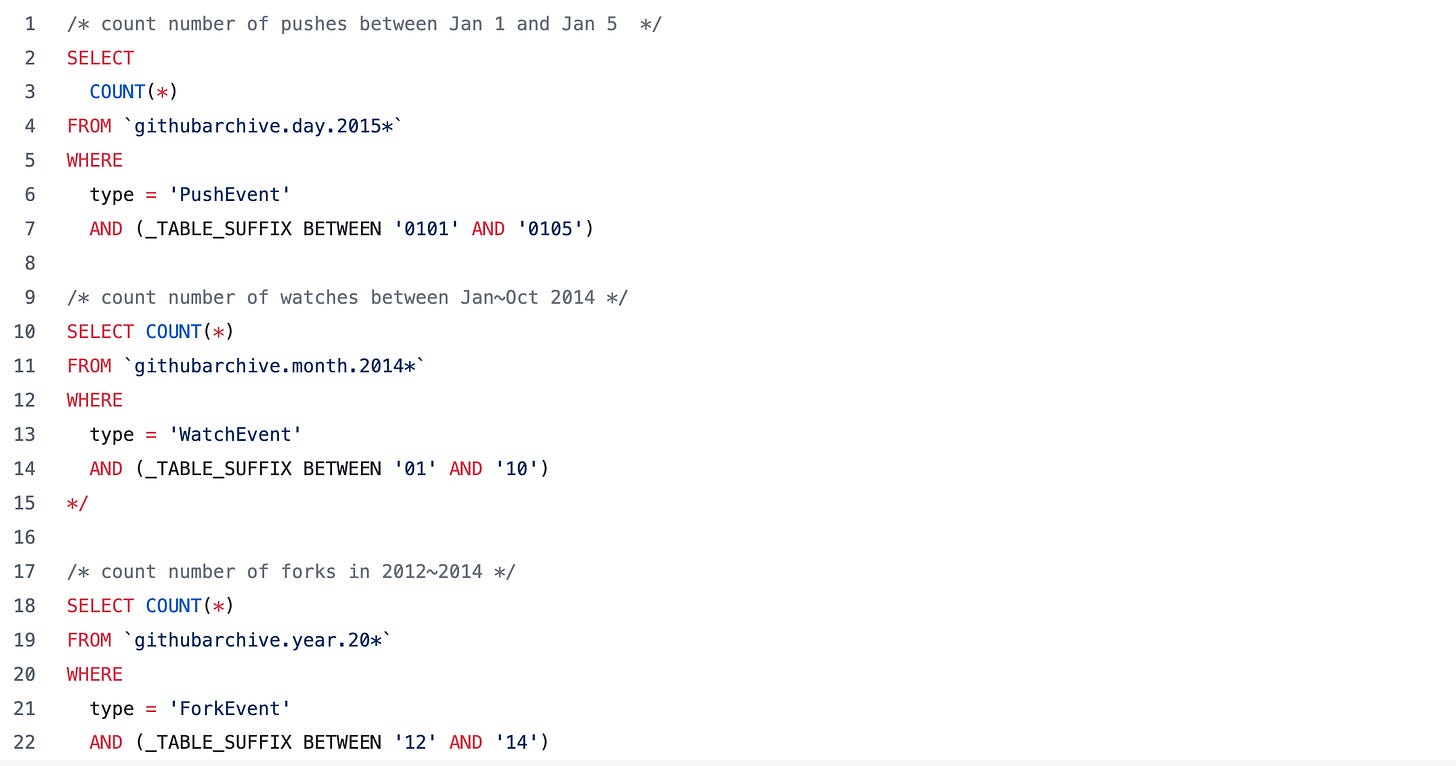

3. GitHub’s GH Archive

The GH Archive project provides a continuously updated, open record of all public GitHub activity, capturing millions of events, such as commits, issues, forks, and comments, across more than a decade.

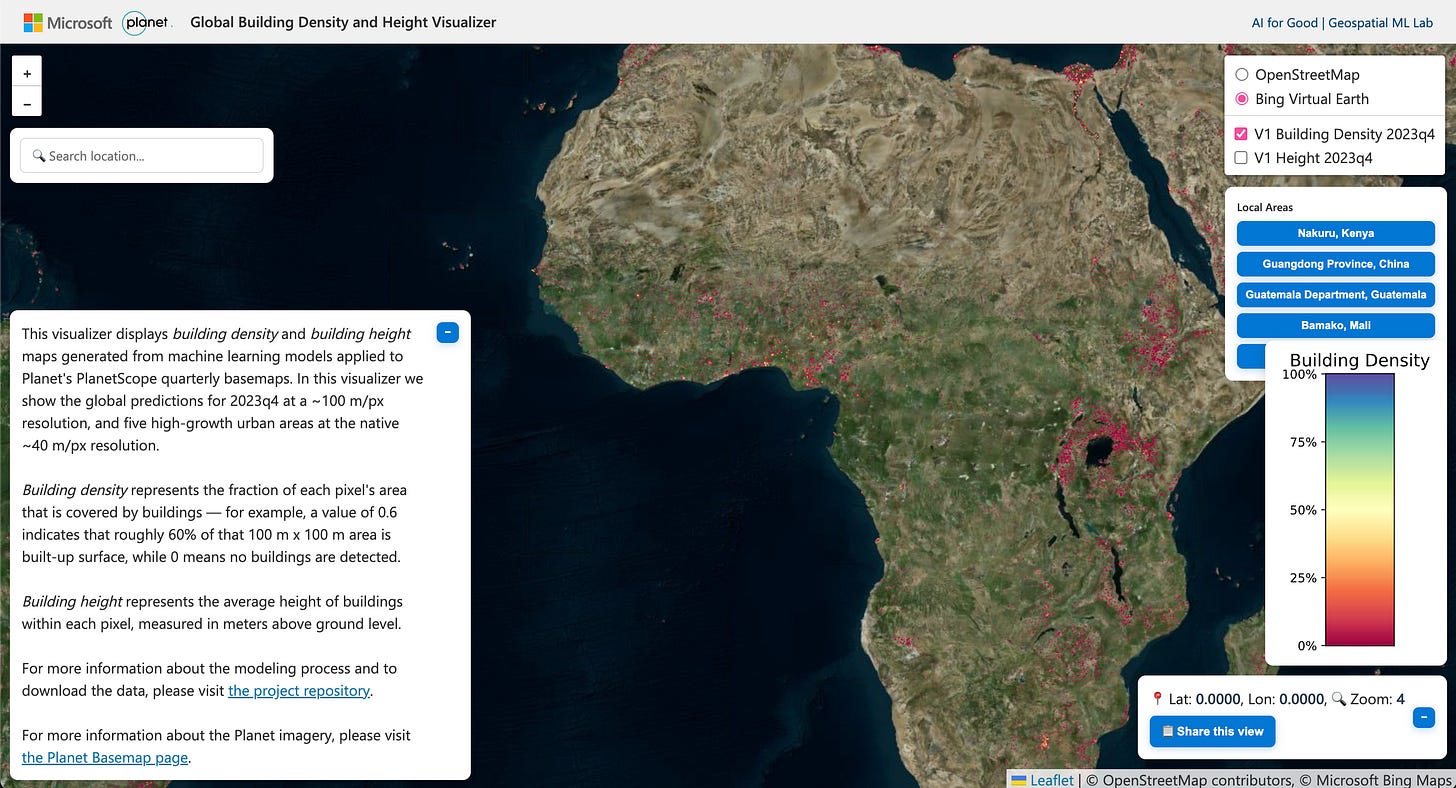

4. Building density and height estimates

TEMPO is a new global dataset and AI model that estimates building density and height from Planet imagery every three months. It provides a 2023 Q4 global layer at 100 m resolution, plus quarterly 40 m time-series datasets for several rapidly changing regions. You can check out the data and code here and the interactive map here.

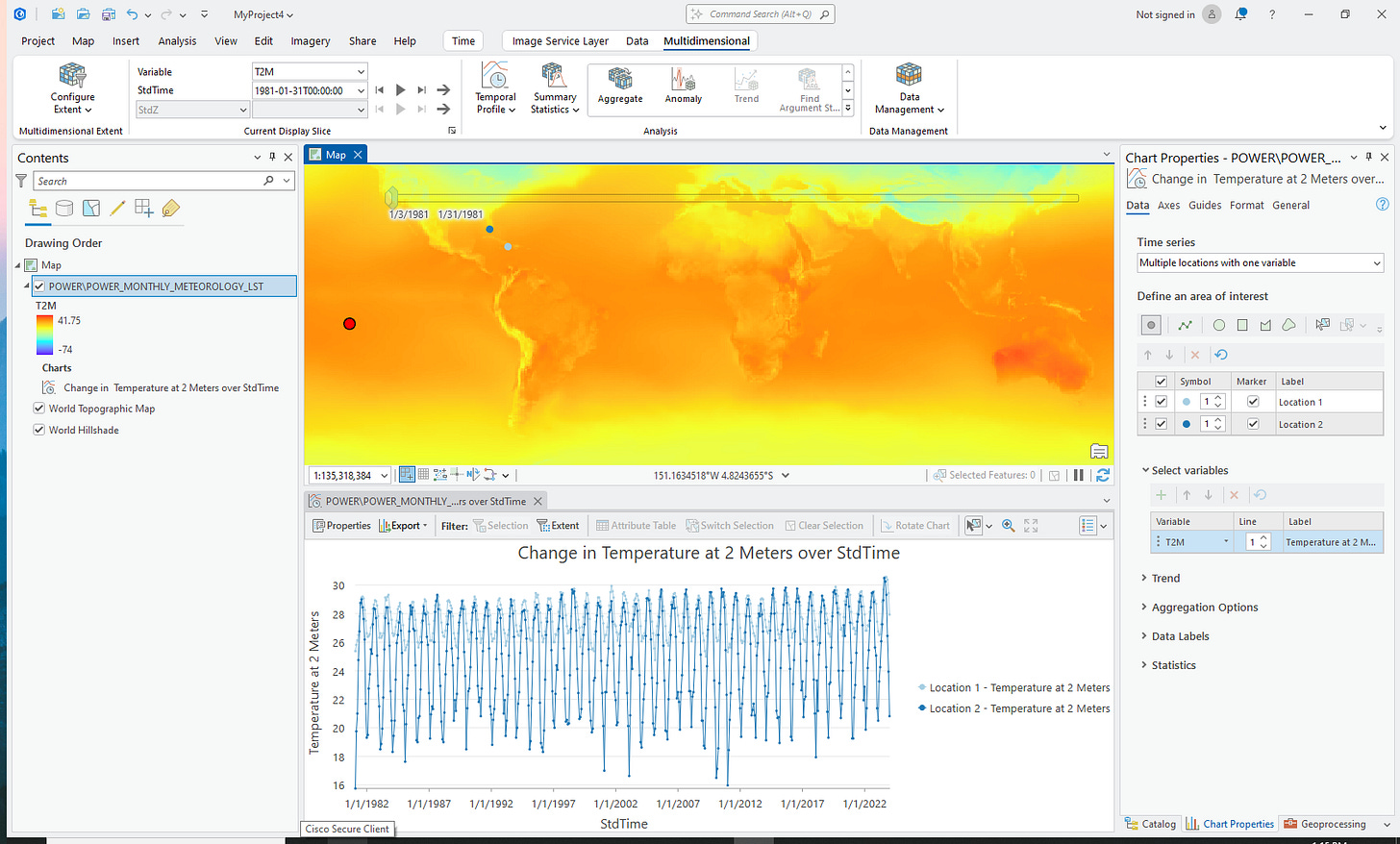

Other useful bits

NASA has released a new set of step-by-step tutorials to help GIS users bring Earth observation data to life, with guides for adding datasets, accessing web services, and building time-enabled mosaics in tools like ArcGIS Pro and QGIS. The resources draw on ECOSTRESS, MERRA-2 and other NASA datasets, making it easier than ever to explore and analyse environmental change.

GIS and satellite data are helping Red Cross teams take life-saving early action in conflict-affected regions like Mali. By blending satellite climate intelligence with community-collected data and conflict monitoring, practitioners can pinpoint vulnerable water sources, understand compound risks, and support more targeted, locally driven humanitarian decisions.

Thanks to Google’s Roads Management Insights, CERTH and NGIS can now track congestion, forecast traffic, and understand network bottlenecks with far greater precision. In Thessaloniki’s massive Flyover construction project, these tools revealed 40–50% longer travel times and fewer crashes, helping authorities reroute traffic, communicate expectations, and make smarter, data-driven decisions for the city’s travellers.

AlphaEarth Foundations’ 64-channel satellite embeddings are now publicly accessible as Cloud-Optimized GeoTIFFs on Google Cloud Storage, giving users flexible, high-performance access across tools like Earth Engine, BigQuery, Vertex AI, and GDAL. The dataset is neatly organized by year and UTM zone, comes with spatial index files for fast querying, and remains free under CC-BY, making large-scale geospatial AI workflows far easier to build and integrate.

Jobs

International Telecommunication Union (ITU) is looking for a remote Data Scientist under their Telecommunication Development Bureau.

UN ESCAP is looking for a Senior Consultant on Crop Biodiversity Assessment based in Indonesia.

UNHCR is looking for an Education Data and Evidence Officer based in Copenhagen.

The International Center for Agricultural Research in the Dry Areas (ICARDA) is looking for a remote Site Suitability and Crop Modeling Expert.

The Gates Foundation is looking for a Senior Strategy Officer, Geospatial Insights based in Seattle.

Just for Fun

NASA visualises a supernova (source).

That’s it for this week.

I’m always keen to hear from you, so please let me know if you have:

new geospatial datasets

newly published papers

geospatial job opportunities

and I’ll do my best to showcase them here.

Yohan

Fantastic synthesis of where geospatial foundation models actually stand. The DeepMind uncertainty paper finding that ViT-based architectures outperform CNNs at error detection is underappreciated, especially since most production pipelines still default to UNet variants for satellite segmentation. Google's Earth AI reasoning agent combining multiple FMs is the direction things need to go though: siloed models miss context that cross-domain embeddings capture. The IBM inpainting simulation is wild, basically turning urban planning into an ML sandbox game.